REVISED August 13th 2017

Most mature deep neural network (DNN) libraries, e.g. TensorFlow, Theano, and Caffe, are typically used through Python rather than directly from C/C++. It is hard to find a DNN library that is convenient to use from plain C/C++, and the ones we do find tend to require heavy resources. Fortunately, we now have tiny-dnn, a C++11 implementation of deep learning (recent versions need a C++14 compiler, as the build commands in Step 5 show). It is header-only, so the library itself does not need to be built separately. It is suitable for deep learning on limited computational resources, embedded systems, and IoT devices.

For a new tiny-dnn user, it may be hard to get used to the environment because the provided examples are aimed directly at the MNIST or CIFAR problems. In this post, I try to give an example that solves a simple problem (XOR) using tiny-dnn. It may sound excessive to use a DNN framework just to solve XOR, but for the sake of better understanding the framework's structure, I think it's okay to do so.

In this example, we will separate the train and test routines. The train routine fits the inputs to the desired outputs and saves the trained network to a binary file. The test routine then reads that file and uses the network to produce a result.

Step 0 : Preparing workspace

Assume we work in a directory dnn-dir that we will create :

mkdir dnn-dir
cd dnn-dir
touch train.cc
touch test.cc

Step 1 : Cloning tiny-dnn

First, we need to clone tiny-dnn from GitHub :

git clone https://github.com/tiny-dnn/tiny-dnn.git

At this step, your folder structure should look like the following :

- dnn-dir
  |--- tiny-dnn
  |--- train.cc
  |--- test.cc

Step 2 : defining problem

Let’s define our XOR problem as follows :

| X | Y | X XOR Y |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |
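The truth table can also be generated in code instead of typed by hand. The following is only a sketch using the standard library: `sample_t`, `xor_target`, `make_inputs`, and `make_targets` are hypothetical names made up for this illustration, and `sample_t` is a stand-in for tiny-dnn's `vec_t`, not the real type.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for tiny-dnn's vec_t, used only in this sketch.
using sample_t = std::vector<float>;

// Returns the XOR target (0 or 1) for two binary inputs.
inline float xor_target(int x, int y) {
  assert((x == 0 || x == 1) && (y == 0 || y == 1));
  return static_cast<float>(x ^ y);
}

// Builds the four input rows in the same order as the table.
inline std::vector<sample_t> make_inputs() {
  std::vector<sample_t> inputs;
  for (int x = 0; x <= 1; ++x)
    for (int y = 0; y <= 1; ++y)
      inputs.push_back({static_cast<float>(x), static_cast<float>(y)});
  return inputs;
}

// Builds the matching one-element target rows.
inline std::vector<sample_t> make_targets() {
  std::vector<sample_t> targets;
  for (int x = 0; x <= 1; ++x)
    for (int y = 0; y <= 1; ++y)
      targets.push_back({xor_target(x, y)});
  return targets;
}
```

Calling `make_inputs()` and `make_targets()` yields the same four rows, in the same order, as the table.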

Step 3 : creating trainer routine

Create our data trainer inside train.cc

#include <iostream>
#include <vector>

#include "tiny_dnn/tiny_dnn.h"

using namespace tiny_dnn;
using namespace std;

int main(int argc, char *argv[]) {
    // 2 inputs -> 3 hidden units (tanh) -> 1 output (tanh)
    network<sequential> net;
    net << layers::fc(2, 3) << activation::tanh()
        << layers::fc(3, 1) << activation::tanh();

    // the four XOR input pairs and their desired outputs
    vector<vec_t> input_data { {0,0}, {0,1}, {1,0}, {1,1} };
    vector<vec_t> desired_out { {0}, {1}, {1}, {0} };

    size_t batch_size = 1;
    size_t epochs = 1000;
    gradient_descent opt;

    // fit the network to the data using mean squared error
    net.fit<mse>(opt, input_data, desired_out, batch_size, epochs);

    double loss = net.get_loss<mse>(input_data, desired_out);
    cout << "mse : " << loss << endl;

    // store the trained network in the file "xor_net"
    net.save("xor_net");
    return 0;
}

Just as the developers say, we don't need to build the library. All we need to do is include it in our program (#include "tiny_dnn/tiny_dnn.h"). To keep the program short, we use the namespaces tiny_dnn and std as usual.

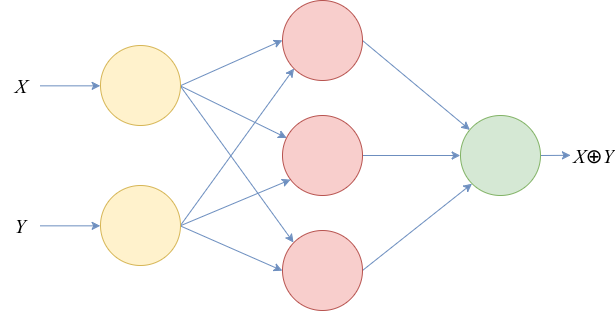

Defining a new network requires a declaration of type tiny_dnn::network, followed by the kind of network (e.g. <sequential>) and the network name (net). Then the layers and the number of units per layer need to be configured. tiny-dnn provides several types of layers, e.g. convolutional, average pooling, and fully connected layers. Our example is a simple multilayer perceptron, so we can build it from fully connected layers as follows :

net << layers::fc(2, 3) << activation::tanh()
    << layers::fc(3, 1) << activation::tanh();

The code above defines an input layer with two inputs, one hidden layer with three perceptrons and a hyperbolic tangent (tanh) activation function, and an output layer with a single output, also followed by tanh. Our network should look like this : 2 inputs -> 3 hidden units -> 1 output.
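To make the layer stack more concrete, here is a hand-written sketch of the arithmetic that a 2-3-1 network with tanh activations performs on one input pair. It uses only the standard library. `TinyXorNet` and `forward` are hypothetical names for this illustration and are not tiny-dnn types or functions; the library's internals are organized differently.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Hypothetical parameter container for a 2-3-1 network (not a tiny-dnn type).
struct TinyXorNet {
  std::array<std::array<float, 2>, 3> w1{};  // hidden weights: 3 units x 2 inputs
  std::array<float, 3> b1{};                 // hidden biases
  std::array<float, 3> w2{};                 // output weights: 1 unit x 3 hidden units
  float b2 = 0.0f;                           // output bias
};

// Forward pass: fully connected (2 -> 3), tanh, fully connected (3 -> 1), tanh.
inline float forward(const TinyXorNet& net, float x, float y) {
  float out = net.b2;
  for (std::size_t j = 0; j < 3; ++j) {
    // weighted sum of the two inputs for hidden unit j
    const float pre = net.w1[j][0] * x + net.w1[j][1] * y + net.b1[j];
    // squash with tanh, then accumulate into the output unit
    out += net.w2[j] * std::tanh(pre);
  }
  return std::tanh(out);
}
```

Training is the process of finding values for `w1`, `b1`, `w2`, and `b2` so that `forward` returns something close to the XOR table. With hand-picked weights (one hidden unit behaving like OR, another like AND) this layout can already represent XOR, so three hidden units are enough for this problem.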

vector<vec_t> input_data { {0,0}, {0,1}, {1,0}, {1,1} };
vector<vec_t> desired_out { {0}, {1}, {1}, {0} };

tiny-dnn defines a type for float arrays/vectors called vec_t. We are going to use it as the container for our XOR data.

size_t batch_size = 1;
size_t epochs = 1000;
gradient_descent opt;
net.fit<mse>(opt, input_data, desired_out, batch_size, epochs);

We can adjust the training parameters by assigning values to the variables batch_size and epochs and by choosing an optimizer (e.g. gradient_descent, RMSprop, adam, etc.). Here we iterate over 1000 epochs and use the gradient_descent algorithm. Then we train our net using the fit command. We use fit because ours is a fitting (regression) problem; for a classification problem, we would use the train command instead.

double loss = net.get_loss<mse>(input_data, desired_out);
cout << "mse : " << loss << endl;
net.save("xor_net");

Finally, we get our result. The get_loss function tells us how good our net is, and the save function stores the trained network.
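For reference, mean squared error can be computed by hand as in the sketch below. This is a generic textbook version written with the standard library only; `mean_squared_error` is a hypothetical helper, and I am not claiming that get_loss aggregates over samples in exactly this way (it may sum rather than average), so the numbers need not match the library's output.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Mean of the squared differences between predictions and targets.
inline float mean_squared_error(const std::vector<float>& predicted,
                                const std::vector<float>& target) {
  assert(predicted.size() == target.size() && !predicted.empty());
  float sum = 0.0f;
  for (std::size_t i = 0; i < predicted.size(); ++i) {
    const float diff = predicted[i] - target[i];
    sum += diff * diff;
  }
  return sum / static_cast<float>(predicted.size());
}
```

A perfect prediction gives 0, and the value grows as the outputs drift away from the desired ones.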

Step 4 : creating test routine

Create our test routine inside test.cc

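#include <cstdlib>
#include <iostream>

#include "tiny_dnn/tiny_dnn.h"

using namespace tiny_dnn;
using namespace std;

int main(int argc, char *argv[]) {
    // expect exactly two inputs, e.g. ./test 0 1
    if (argc != 3) {
        cerr << "usage: " << argv[0] << " X Y" << endl;
        return 1;
    }

    // load the network saved by the train routine
    network<sequential> net;
    net.load("xor_net");

    // atof returns double, so cast explicitly to avoid a narrowing warning
    vec_t in = {static_cast<tiny_dnn::float_t>(atof(argv[1])),
                static_cast<tiny_dnn::float_t>(atof(argv[2]))};
    vec_t result = net.predict(in);
    cout << result.at(0) << endl;
    return 0;
}

All we do is load the trained xor_net, feed it the two numbers given on the command line, and print the prediction.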
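Note that atof silently returns 0 for text that is not a number, so a typo on the command line would still produce a prediction. A stricter parser could look like the sketch below, which uses std::strtof from the standard library. `parse_float` is a hypothetical helper for this illustration and is not part of tiny-dnn.

```cpp
#include <cerrno>
#include <cstdlib>

// Parses text as a float; returns false on empty, malformed, or out-of-range input.
inline bool parse_float(const char* text, float& value) {
  if (text == nullptr || *text == '\0') return false;
  char* end = nullptr;
  errno = 0;
  const float parsed = std::strtof(text, &end);
  // reject input with no digits, trailing garbage, or a range error
  if (end == text || *end != '\0' || errno == ERANGE) return false;
  value = parsed;
  return true;
}
```

In the test routine, each atof call could be replaced by a call to `parse_float`, printing the usage message and exiting when it returns false.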

Step 5 : compiling and testing

Compile the files using g++ and add the -pthread option.

g++ -std=c++14 -Itiny-dnn -pthread -O2 train.cc -o train
g++ -std=c++14 -Itiny-dnn -pthread -O2 test.cc -o test

The programs should give results like the following :

$ ./train
mse : 0.0360134
$ ./test 0 0
0.0892
$ ./test 0 1
0.9934
$ ./test 1 0
0.9874
$ ./test 1 1
0.0986

That's all it takes to solve the XOR problem using tiny-dnn. You can configure the network with a more complicated architecture to solve more complex problems. In the near future, tiny-dnn will support GPU processing to make our nets more powerful.
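The outputs shown above are close to 0 or 1 but never exactly equal to them, so a caller that needs a strict XOR answer has to round them. A minimal sketch follows, assuming a cut-off of 0.5; the threshold and the names `to_bit` and `matches_xor` are my own choices for this illustration, not something tiny-dnn prescribes.

```cpp
#include <array>
#include <cstddef>

// Maps a network output to 0 or 1 using a cut-off (0.5 is an assumption).
inline int to_bit(float output, float threshold = 0.5f) {
  return output >= threshold ? 1 : 0;
}

// Checks four outputs, ordered (0,0), (0,1), (1,0), (1,1), against the XOR table.
inline bool matches_xor(const std::array<float, 4>& outputs, float threshold = 0.5f) {
  const std::array<int, 4> expected = {{0, 1, 1, 0}};
  for (std::size_t i = 0; i < outputs.size(); ++i)
    if (to_bit(outputs[i], threshold) != expected[i]) return false;
  return true;
}
```

With the four outputs listed above (0.0892, 0.9934, 0.9874, 0.0986), `matches_xor` returns true.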

Correct me if I am wrong

Sincerely,

Tirtadwipa Manunggal

tirtadwipa.manunggal@gmail.com

So, I'm trying to run your examples, but I keep on getting a bunch of errors when trying to compile with g++ using the commands written here. These errors seem to be in TinyDNN, but I am able to successfully compile the examples with no problem. I am using your steps exactly. Is there something missing that I am just not understanding?

Dear Unknown, I am sorry because I had barely maintained this blog. It seems the developer had changed how layer template should be declared. I have revised my code up there for recent codes. And also it's my mistake to add flag for C++11, it should be C++14. You could rerun the codes and it should be working. Please let me know if you have any difficulties. Enjoy your time.

Thanks! That worked perfectly!

Thank you for your quick response!

Great tutorial Mr. Tirta! just to avoid warning, I think it should be "float" type in front of `vec_t in` and `vec_t result`. am I right..?

I don't think so. 'vec_t' is a user-defined type as we can see at tiny_dnn/util/util.h line 63. It is explicitly declared there as a float-typed vector (a std::vector of float_t), not a scalar. Thus putting the type float in front of a vec_t declaration is not necessary and would cause a syntax error.

Thanks for this nice tutorial.

Would you accept it to be merged into the tiny-dnn github?

Dear Etienne, I would be so pleased :D
